FreeBSD 8: is it worth upgrading?

2009.10.05. 12:35

Definitely:

Looks brutal, and it is:

| Test | Peak performance 7.x->8.x |

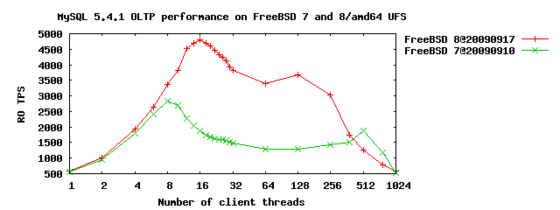

| MySQL OLTP RO | 1.6970x |

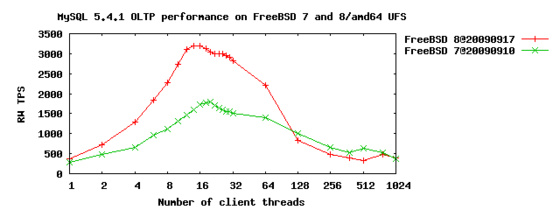

| MySQL OLTP RW | 1.7843x |

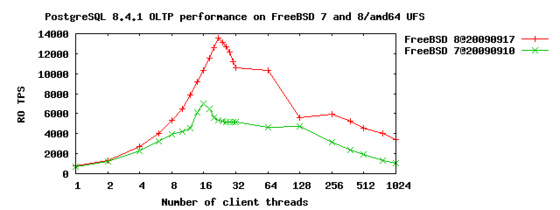

| PostgreSQL OLTP RO | 1.9257x |

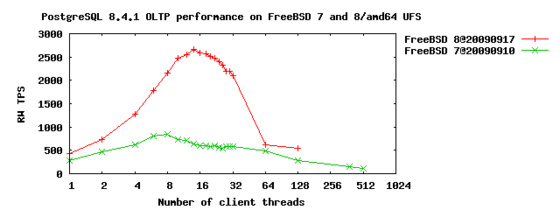

| PostgreSQL OLTP RW | 3.1556x |

If somebody thinks that a 1.5-3x performance increase between two versions of an OS, in such a complex piece of software, is a sign that something was badly wrong before, he's probably right. This shows that FreeBSD is still far from multicore heaven, but it gets closer with every release, as earlier measurements by FreeBSD developer Kris Kennaway show. Not to mention Linux, which wasn't at the top at the time...

But what causes this massive speedup? FreeBSD 8 has superpages support turned on by default, and the ULE scheduler got some work too: it can now recognize the hierarchy of the CPUs and their caches and take it into account when scheduling.

Sadly, this detection is far from perfect: according to FreeBSD 8, our test machine's topology looks like this:

kern.sched.topology_spec: <groups>

<group level="1" cache-level="0">

<cpu count="24" mask="0xffffff">0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23</cpu>

<flags></flags>

<children>

<group level="3" cache-level="2">

<cpu count="6" mask="0x3f">0, 1, 2, 3, 4, 5</cpu>

<flags></flags>

</group>

<group level="3" cache-level="2">

<cpu count="6" mask="0xfc0">6, 7, 8, 9, 10, 11</cpu>

<flags></flags>

</group>

<group level="3" cache-level="2">

<cpu count="6" mask="0x3f000">12, 13, 14, 15, 16, 17</cpu>

<flags></flags>

</group>

<group level="3" cache-level="2">

<cpu count="6" mask="0xfc0000">18, 19, 20, 21, 22, 23</cpu>

<flags></flags>

</group>

</children>

</group>

</groups>

In practice, it's a whole lot different: each six-core package is made up of three dual-core parts, and the two cores of each part share an L2 cache.

The correct hierarchy comes with a small patch to subr_smp.c that adds a new topology, which we can then select manually:

case 8:

/* six core, 3 dualcore parts on each package share l2. */

top = smp_topo_2level(CG_SHARE_L3, 3, CG_SHARE_L2, 2, 0);

break;

After this modification, the topology looks a lot better:

kern.sched.topology_spec: <groups>

<group level="1" cache-level="0">

<cpu count="24" mask="0xffffff">0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23</cpu>

<flags></flags>

<children>

<group level="3" cache-level="3">

<cpu count="6" mask="0x3f">0, 1, 2, 3, 4, 5</cpu>

<flags></flags>

<children>

<group level="5" cache-level="2">

<cpu count="2" mask="0x3">0, 1</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0xc">2, 3</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0x30">4, 5</cpu>

<flags></flags>

</group>

</children>

</group>

<group level="3" cache-level="3">

<cpu count="6" mask="0xfc0">6, 7, 8, 9, 10, 11</cpu>

<flags></flags>

<children>

<group level="5" cache-level="2">

<cpu count="2" mask="0xc0">6, 7</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0x300">8, 9</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0xc00">10, 11</cpu>

<flags></flags>

</group>

</children>

</group>

<group level="3" cache-level="3">

<cpu count="6" mask="0x3f000">12, 13, 14, 15, 16, 17</cpu>

<flags></flags>

<children>

<group level="5" cache-level="2">

<cpu count="2" mask="0x3000">12, 13</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0xc000">14, 15</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0x30000">16, 17</cpu>

<flags></flags>

</group>

</children>

</group>

<group level="3" cache-level="3">

<cpu count="6" mask="0xfc0000">18, 19, 20, 21, 22, 23</cpu>

<flags></flags>

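<children>

<group level="5" cache-level="2">

<cpu count="2" mask="0xc0000">18, 19</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">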

<cpu count="2" mask="0x300000">20, 21</cpu>

<flags></flags>

</group>

<group level="5" cache-level="2">

<cpu count="2" mask="0xc00000">22, 23</cpu>

<flags></flags>

</group>

</children>

</group>

</children>

</group>

</groups>

Clearly, there is still a lot of work to do on the scheduler: it can be plainly seen that it can't keep all the CPUs as busy as they could be, and that hurts application performance badly.
